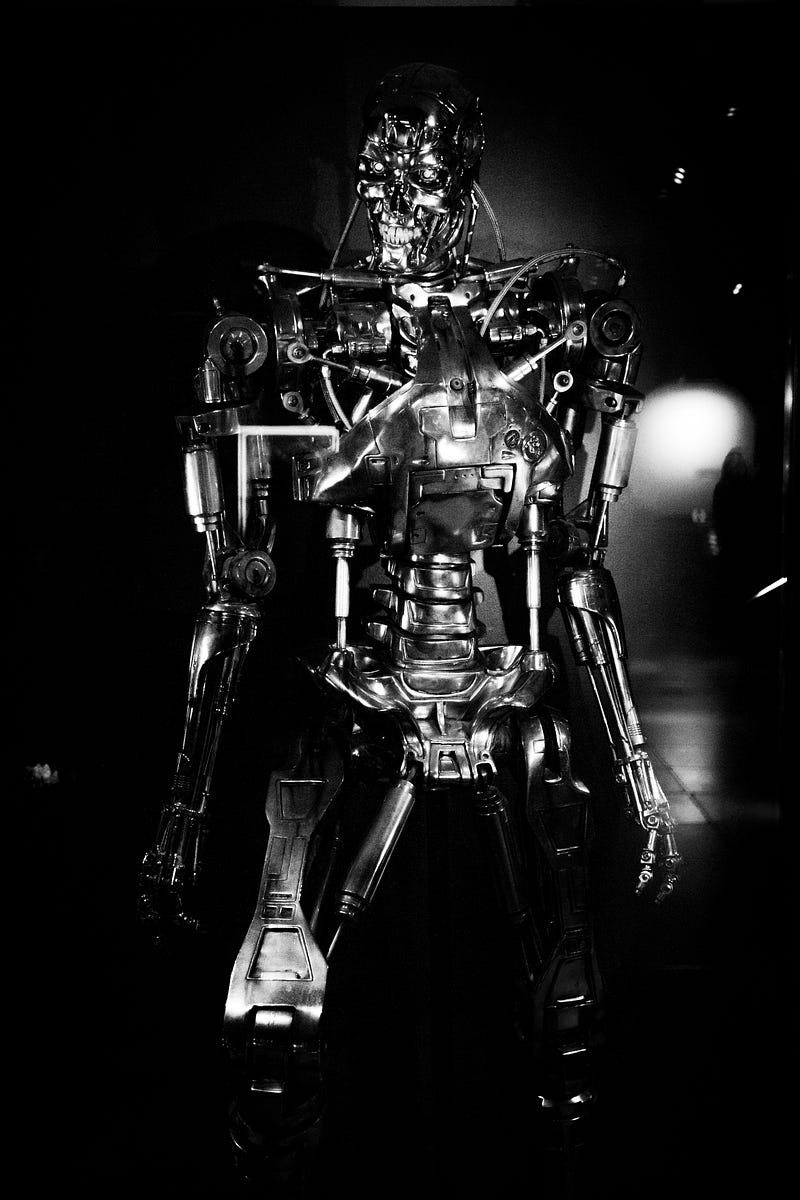

You’ve seen the Terminator movies — the AI apocalypse where Skynet becomes self-aware and turns the machines against humanity. It’s the stuff of nightmares and science fiction…or is it?

What if I told you that militaries around the world are already developing autonomous weapon systems powered by artificial intelligence — hunter-killer drones and robots that can identify, track, and destroy targets all on their own? No human in the loop, just algorithms calling the shots on who lives and dies.

Sound too far-fetched to be true? Think again. Buckle up, because we’re about to dive deep into the chilling reality of lethal autonomous weapons systems (LAWS) and the intense global debate around controlling this disturbing technology before it spins out of control.

Here’s what we’ll explore:

- The capabilities of current and emerging AI weapon systems
- The benefits and risks of handing kill decisions over to machines
- Key technical challenges like machine bias, unpredictability, and lack of explainability
- Tough ethical quandaries with no clear answers
- The race between nations to set the rules before it’s too late

Brace yourself for a journey into the dark and contentious world of autonomous AI warfare. Just don’t have any nightmares about robot uprisings…

The AI Arms Race Is Heating Up

Imagine for a moment: A swarm of insect-sized killer drones, scouring a city for enemy combatants. No remote human pilots, just autonomous AI algorithms identifying threats, calculating risk, and making split-second decisions to deploy lethal force.

Chilling, right? Welcome to the future of warfare.

Artificial intelligence is upending the ancient rules of war, and battlefields may soon be prowled by intelligent killer robots that can outthink, outmaneuver, and outgun human soldiers. Defense leaders around the world are acutely aware of AI’s potential to revolutionize firepower. Russia, China, the United States, Israel, South Korea, and others are all pouring money into autonomous weapon development. It’s a disruptive, destabilizing arms race with no clear rules or boundaries.

How far is too far when it comes to ceding kill authority to machines?

Autonomy is a game-changing, paradigm-shifting capability for any military. Why put soldiers’ lives at risk when you can deploy expendable uninhabited systems to overwhelm the enemy? A single commander could theoretically control a swarm of low-cost killer drones, striking multiple targets simultaneously with little risk to friendly troops.

Think about it: an army of machines, relentless and fearless, controlled by a single mind. What’s more, AI weaponry could adapt and optimize in real time based on the dynamics of the battlefield. With inhuman precision and processing power, such systems could rapidly analyze reams of battlefield sensor data and outperform their human counterparts. We’d be up against an efficient, merciless killing machine with no fatigue and no sense of ethics restraining it.

Ethics schmethics, go AI killbots!

This is no longer science fiction. Militaries are already equipping drones with computer vision, autonomous navigation, and varying degrees of offensive autonomy. Israel’s Harop is an early example: a loitering munition that can circle over an area and autonomously home in on enemy radar emissions. As systems like these move into mass production, the era of cheap autonomous wingmen looms.

…But at what cost to our humanity? You can’t put the AI genie back in the bottle once it’s loosed.

Still, the Pentagon and others contend that bringing AI into warfare is necessary and that autonomy has potential humanitarian upsides. The argument is that autonomous systems could make smarter decisions to avoid civilian casualties than human soldiers in the heat of battle. And an AI robotic wingman could provide precision firepower while keeping our troops safer.

But should we risk ceding kill decisions to software? Can we be sure the machines will follow our ethical values and rules of engagement? Let’s dig into the key risks and technical obstacles posed by AI killing systems…

What could possibly go wrong by giving weapons minds of their own?

The Danger of Algorithmic Bias in AI Weapons

Imagine a scenario where an AI-powered sentry gun at a checkpoint misjudges a human’s emotional state or body language because of biases in its training data. Someone gets killed by mistake based on a machine’s flawed analysis and overreaction.

Far-fetched? Not really. Bias is an enormous pitfall that reinforces skepticism of AI for high-stakes applications like lethal force. Machine learning is only as good as the information it’s trained on. If that data reflects human cultural prejudices, historical injustices, or other skewed information, the AI will pick up on and potentially amplify those same biases when making decisions.

For example, if an AI targeting system were trained mostly on imagery of military-age males from a certain ethnic group, it might learn to associate that demographic with hostile threats. Suddenly, an innocuous civilian becomes a high-value target in the eyes of an AI whose analysis has absorbed that prejudice.
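To see how skewed data turns into skewed decisions, here is a deliberately tiny, hypothetical sketch. It is not how any real weapon system works: the "model" simply counts how often each (group, behavior) pair was labeled positive in made-up training data, and the groups, labels, counts, and 0.5 threshold are all invented for illustration. Both groups behave identically; only the data collection is lopsided.

```python
from collections import Counter

# Hypothetical, made-up training set of (group, behavior, label) rows.
# Everyone shows the same "neutral" behavior; group "A" is simply
# over-represented among positive labels because of how the data was gathered.
training_data = (
    [("A", "neutral", 1)] * 40
    + [("A", "neutral", 0)] * 10
    + [("B", "neutral", 1)] * 5
    + [("B", "neutral", 0)] * 45
)


def fit_rates(rows):
    """Estimate P(label = 1 | group, behavior) by simple counting."""
    positives = Counter()
    totals = Counter()
    for group, behavior, label in rows:
        totals[(group, behavior)] += 1
        positives[(group, behavior)] += label
    return {key: positives[key] / totals[key] for key in totals}


def flag(model, group, behavior, threshold=0.5):
    """Flag an input when its estimated positive rate exceeds the threshold."""
    return model.get((group, behavior), 0.0) > threshold


model = fit_rates(training_data)
print(model[("A", "neutral")])  # 0.8
print(model[("B", "neutral")])  # 0.1
print(flag(model, "A", "neutral"), flag(model, "B", "neutral"))  # True False
```

Because behavior is identical across both groups, group membership is the only signal left to learn from, so two people doing exactly the same thing get opposite outcomes. Real systems pick up far subtler proxies than an explicit group label, which makes the same failure much harder to spot.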

Would you trust a biased machine to decide who lives and dies?

Do you think the public would accept an AI system killing civilians of a specific gender, race, or background because data issues skewed its outputs? Probably not. Yet this is a very real danger whenever AI with incomplete or flawed training makes life-or-death choices.

If an AI weapon discriminates based on appearance or demographics, is that a bug or just it doing its biased job a little too well?

It’s a challenging issue because AI tends to absorb whatever prejudices are present in real-world data. Independent audits of commercial facial-analysis and facial-recognition systems have already found markedly higher error rates for women and people with darker skin, traced in part to unrepresentative training datasets.

But now just imagine the stakes when you switch that AI application from algo-shopping to algo-sniping. We simply can’t take chances on autonomous weapons systems discriminating against civilians or POWs in the field.

Can we ever eliminate discrimination from AI if it’s trained on imperfect human-generated data?

At minimum, weeding out discrimination in AI kill systems would demand a tremendous amount of meticulous, representative training data and rigorous testing standards. I’m talking volumes of richly annotated image, video, and sensor data capturing all the complexity and nuance of real battlespaces.

Is it even possible to scrub all prejudice from the mind of a killer robot? We’ve never been able to fully erase unfair human biases. Letting a machine learning system make unilateral kill decisions based on incomplete, discriminatory training could prove catastrophic.

Does that make killer robots inherently unethical?

A Machine’s Inscrutable Logic and the Black Box Problem

Let’s stay on the topic of trusting machines with matters of life and death. Even if we solved the problem of training AI to be free of discriminatory biases, there’s another imposing technical hurdle: explaining the logic and rationale of the AI’s decision-making in a clear, auditable way. In other words, opening up the “black box.”

Seems like a commonsense requirement, right? Think again.

What happens when this AI inevitably makes a bad call and civilians get killed? Can you just shrug, wave some math equations, and say “inscrutable black box reasons” in response? Not a great look.

There should be clear lines of responsibility and comprehensible explanations for any unintended harm caused by AI firepower. The challenge is that modern AI systems such as deep neural networks are largely opaque in their decision-making. We can see the inputs going in and the outputs coming out, but what happens in between, often millions of learned numerical weights, is shrouded in brain-like complexity that doesn’t translate into reasons a human can read.

Would you trust an opaque, unexplainable AI to take human lives based on its obtuse robo-logic?

Even the engineers who create these AIs often can’t fully articulate the reasoning behind the system’s analysis of ambiguous information. It’s a black box of statistical algorithms detecting patterns within patterns — potentially surfacing biases or flaws we can’t audit or understand.

The quest to create explainable AI (XAI) that can justify its reasoning in human terms is an active field of research and development. The goal is AI systems that leave clear decision trails for auditing, whether through attribution methods that highlight which inputs most influenced a decision, inherently interpretable models, or some other way of peering under the hood.
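For the simplest kind of model, a decision trail comes for free. In a linear scoring model, the score is a bias plus the sum of weight × value over the inputs, so each weight × value term is an exact, auditable share of the result. The sketch below assumes such a model; the feature names, weights, and inputs are hypothetical placeholders, not drawn from any real system.

```python
def explain_linear(weights, bias, features):
    """Score an input with a linear model and return each feature's contribution.

    Because score = bias + sum(weight * value), every weight * value term is an
    exact share of the final score that an auditor can inspect.
    """
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = bias + sum(contributions.values())
    # Rank features by how strongly they pushed the score up or down.
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return score, ranked


# Hypothetical weights and inputs, chosen only to illustrate the bookkeeping.
weights = {"feature_a": 2.0, "feature_b": -1.0, "feature_c": 0.5}
inputs = {"feature_a": 1.0, "feature_b": 3.0, "feature_c": 2.0}

score, ranked = explain_linear(weights, bias=0.5, features=inputs)
print(score)   # 0.5
print(ranked)  # [('feature_b', -3.0), ('feature_a', 2.0), ('feature_c', 1.0)]
```

A deep neural network offers no such clean additive breakdown: its inputs interact through many nonlinear layers, so attribution methods can only approximate an answer like this one. That gap is the black box problem in miniature.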

Can we ever truly understand the mind of a killer robot?

DARPA has run a dedicated Explainable AI research program, and others are hard at work on the challenge, but progress is slow and many question whether we can ever achieve full explainability with advanced AI. It may just be too alien for our puny human brains.

The Unpredictability of AI on the Battlefield

Imagine an autonomous drone tasked with patrolling a war zone. It identifies a suspicious vehicle and decides to launch a missile strike. But the “suspicious vehicle” turns out to be a civilian bus filled with refugees. The AI made a lethal mistake. How do we explain that? Who takes the blame?

Are we ready for such moral and ethical quagmires?

This isn’t an idle hypothetical. AI systems, no matter how advanced, can and do make mistakes. The battlefield is chaotic, filled with variables and unpredictability that even the most sophisticated AI might misinterpret. Unlike humans, AI lacks common sense, intuition, and a deep grasp of context. It processes data and makes decisions based on patterns, but it doesn’t “understand” in the way humans do. This fundamental gap can lead to catastrophic errors, especially in the high-stakes environment of warfare. Would you trust a machine with no intuition or understanding to decide who lives and dies?

The Ethical Quagmire of Autonomous Weapons

The ethical implications of autonomous weapons are profound and unsettling. Should machines have the power to make life-or-death decisions? Can we trust them to uphold human values and laws of war?

What happens when our creations start making ethical decisions?

Many ethicists argue that delegating kill decisions to machines is a fundamental breach of morality: there is an inherent human responsibility in taking life that machines cannot comprehend. Warfare involves complex judgments about intention, proportionality, and discrimination between combatants and civilians that AI is currently ill-equipped to handle.

Can we ever program machines to understand the value of human life?

Moreover, if an autonomous weapon makes a mistake or commits a war crime, accountability becomes a murky issue. Who do we hold responsible? The programmer, the manufacturer, the military commander, or the machine itself?

Is it ethical to allow machines to decide human fate?

These questions don’t have easy answers, but they need to be addressed before autonomous weapons become a ubiquitous reality on the battlefield. The international community is grappling with these issues, most visibly in years of talks under the UN Convention on Certain Conventional Weapons, with many states and advocacy groups calling for a ban on fully autonomous weapons. Others argue that regulation, not prohibition, is the way forward.

Is regulation enough to prevent potential AI atrocities?

The development of autonomous weapons is accelerating, and the international community is in a race against time to establish norms and regulations. The stakes are high, and the potential consequences are dire.

Are we ready to face the consequences of our technological advancements?

Nations are weighing the pursuit of strategic military advantage against their ethical and moral responsibilities. The path forward is fraught with challenges, but one thing is clear: the decisions we make today will shape the future of warfare and humanity.

Can we afford to get this wrong?

The rise of AI-powered killer robots is not just a plot for science fiction. It’s an imminent reality that poses profound ethical, technical, and strategic challenges. As we march towards an autonomous future, we must carefully weigh the benefits and risks, ensuring that we don’t lose our humanity in the process.

Will we be able to control our creations, or will they control us? The clock is ticking, and the decisions we make today will determine whether we face a dystopian future or harness AI for a safer, more ethical world. Are we ready to take on this challenge?